一、鸿蒙调度/LiteOS调度

1

2

3

| //鸿蒙OS调度

https://codechina.csdn.net/kuangyufei/kernel_liteos_a_note/-/wikis/04_%E4%BB%BB%E5%8A%A1%E8%B0%83%E5%BA%A6%E7%AF%87

https://juejin.cn/post/7015965298234753032

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

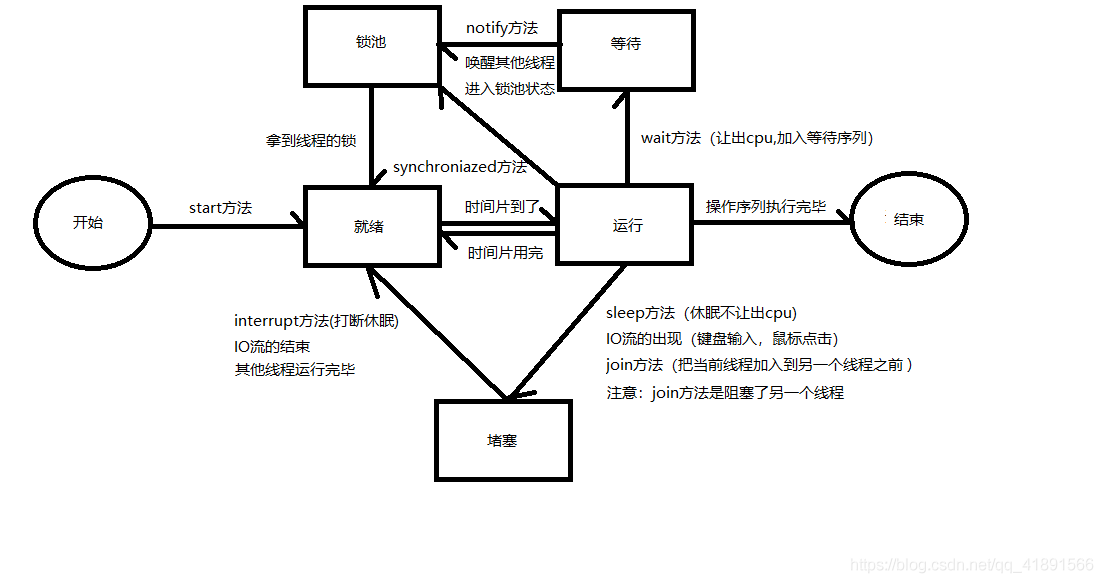

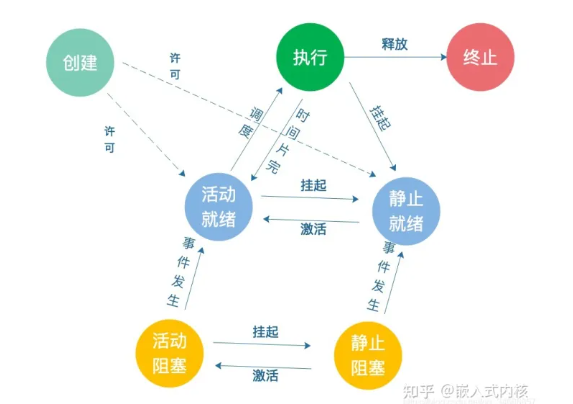

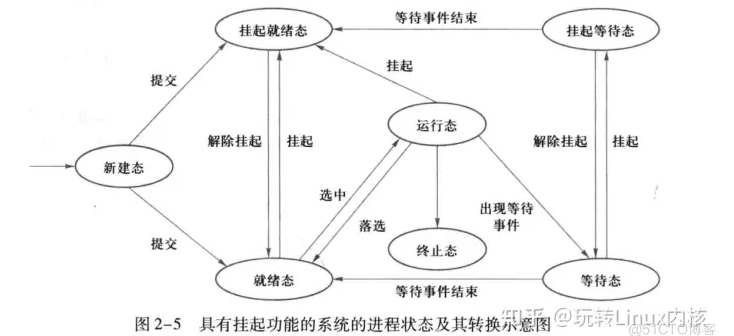

| 线程状态说明:

初始化(Init):该线程正在被创建。

就绪(Ready):该线程在就绪列表中,等待CPU调度。

运行(Running):该线程正在运行。

阻塞(Blocked):该线程被阻塞挂起。Blocked状态包括:pend(因为锁、事件、信号量等阻塞)、suspend(主动pend)、delay(延时阻塞)、pendtime(因为锁、事件、信号量时间等超时等待)。

退出(Exit):该线程运行结束,等待父线程回收其控制块资源。

说LosTaskCB之前先说下官方文档任务状态对应的 define,可以看出task和线程是一个东西。

#define OS_TASK_STATUS_INIT 0x0001U

#define OS_TASK_STATUS_READY 0x0002U

#define OS_TASK_STATUS_RUNNING 0x0004U

#define OS_TASK_STATUS_SUSPEND 0x0008U

#define OS_TASK_STATUS_PEND 0x0010U

#define OS_TASK_STATUS_DELAY 0x0020U

#define OS_TASK_STATUS_TIMEOUT 0x0040U

#define OS_TASK_STATUS_PEND_TIME 0x0080U

#define OS_TASK_STATUS_EXIT 0x0100U

-----https://juejin.cn/post/7015965298234753032

|

Huawei LiteOS 系统中的任务管理模块为用户提供下面几种功能。

| 功能分类 |

接口名 |

描述 |

| 任务的创建和删除 |

LOS_TaskCreateOnly |

创建任务,并使该任务进入suspend状态,并不调度。 |

| |

LOS_TaskCreate |

创建任务,并使该任务进入ready状态,并调度。 |

| |

LOS_TaskDelete |

删除指定的任务。 |

| 任务状态控制 |

LOS_TaskResume |

恢复挂起的任务。 |

| |

LOS_TaskSuspend |

挂起指定的任务。 |

| |

LOS_TaskDelay |

任务延时等待。 |

| |

LOS_TaskYield |

显式放权,调整指定优先级的任务调度顺序。 |

| 任务调度的控制 |

LOS_TaskLock |

锁任务调度。 |

| |

LOS_TaskUnlock |

解锁任务调度。 |

| 任务优先级的控制 |

LOS_CurTaskPriSet |

设置当前任务的优先级。 |

| |

LOS_TaskPriSet |

设置指定任务的优先级。 |

| |

LOS_TaskPriGet |

获取指定任务的优先级。 |

| 任务信息获取 |

LOS_CurTaskIDGet |

获取当前任务的ID。 |

| |

LOS_TaskInfoGet |

设置指定任务的优先级。 |

| |

LOS_TaskPriGet |

获取指定任务的信息。 |

| |

LOS_TaskStatusGet |

获取指定任务的状态。 |

| |

LOS_TaskNameGet |

获取指定任务的名称。 |

| |

LOS_TaskInfoMonitor |

监控所有任务,获取所有任务的信息。 |

| |

LOS_NextTaskIDGet |

获取即将被调度的任务的ID。 |

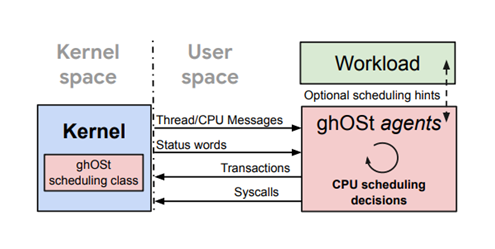

二、ghost调度器

在userspace设计调度代理agent, 内核将线程信息(new、wakeup、block、relax、dead等)通过共享内存方式发送给调度代理agent,调度代理agent实施调度决策,并通过Syscall下发给内核;

ghost调度实现: ghost-kenrel / ghost-userspace

1> ghost-kernel–如何调度切换

1、ghost class timerfd

1

2

3

4

5

6

7

8

9

10

| do_timerfd_settime {

...

#ifdef CONFIG_SCHED_CLASS_GHOST

if (tfdl)

memcpy(&ctx->timerfd_ghost, tfdl, sizeof(struct timerfd_ghost));

else

ctx->timerfd_ghost.flags = 0; /* disabled */

#endif

...

}

|

2、kernel/sched/ghost.c

1> 新增调度类 调度实体….

1

2

3

4

5

6

7

8

9

10

11

12

13

| //新增ghost task和agent的调度类

#define SCHED_DATA \

STRUCT_ALIGN(); \

__begin_sched_classes = .; \

*(__idle_sched_class) \

+ *(__ghost_sched_class) \ //ghost task schedhere

*(__fair_sched_class) \

*(_rt_sched_class) \

*(__dl_sched_class) \

+ *(__ghost_agent_sched_class) \ ///ghost agent sched here

*(__stop_sched_class) \

__end_sched_classes = .;

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

| //ghost调度实体阿

+struct sched_ghost_entity {

+ struct list_head run_list;

+ ktime_t last_runnable_at;

+

+ /* The following fields are protected by 'task_rq(p)->lock' */

+ struct ghost_queue *dst_q;

+ struct ghost_status_word *status_word;

+ struct ghost_enclave *enclave;

+

+ /*

+ * See also ghost_prepare_task_switch() and ghost_deferred_msgs()

+ * for flags that are used to defer messages.

+ */

+ uint blocked_task : 1;

+ uint yield_task : 1;

+ uint new_task : 1;

+ uint agent : 1;

+

+ struct list_head task_list;

+};

|

2> scheduler_ipi

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

| //核间中断处理函数

do_handle_IPI

->scheduler_ipi //IPI_RESCHEDULE

void scheduler_ipi(void)

{

/*

* Fold TIF_NEED_RESCHED into the preempt_count; anybody setting

* TIF_NEED_RESCHED remotely (for the first time) will also send

* this IPI.

*/

preempt_fold_need_resched();

if (llist_empty(&this_rq()->wake_list) && !got_nohz_idle_kick())

return;

/*

* Not all reschedule IPI handlers call irq_enter/irq_exit, since

* traditionally all their work was done from the interrupt return

* path. Now that we actually do some work, we need to make sure

* we do call them.

*

* Some archs already do call them, luckily irq_enter/exit nest

* properly.

*

* Arguably we should visit all archs and update all handlers,

* however a fair share of IPIs are still resched only so this would

* somewhat pessimize the simple resched case.

*/

irq_enter();

sched_ttwu_pending();

/*

* Check if someone kicked us for doing the nohz idle load balance.

*/

if (unlikely(got_nohz_idle_kick())) {

this_rq()->idle_balance = 1;

raise_softirq_irqoff(SCHED_SOFTIRQ);

}

irq_exit();

}

//https://www.codenong.com/cs106431107/ 引用于

scheduler_ipi()函数调用sched_ttwu_pending()函数唤醒pending的任务然后调用

raise_softirq_irqoff()函数发起一个软中断,软中断将在后续的文章中介绍。

IPI_CALL_FUNC:函数smp_call_function()生成的中断,通过调用函数

generic_smp_call_function_interrupt()最终调用函数flush_smp_call_function_queue(),

该函数调用所有在队列中pending的回调函数。flush_smp_call_function_queue()函数的源码可

以在kernel/smp.c文件中可以找到:

http://oliveryang.net/2016/03/linux-scheduler-2/

|

1

2

3

4

5

6

7

8

9

10

11

| //流程1

ghost_latched_task_preempted

->_ghost_task_preempted if ghost_police

->set_tsk_need_resched && set_preempt_need_resched

//流程2

context_switch

->prepare_task_switch

->ghost_prepare_task_switch || pick_next_ghost_agent //pick_next_ghost_agent忽略

->ghost_task_preempted

->_ghost_task_preempted

|

3、new syscall – ghost ghost_run

1

2

| 450 64 ghost_run sys_ghost_run

451 64 ghost sys_ghost

|

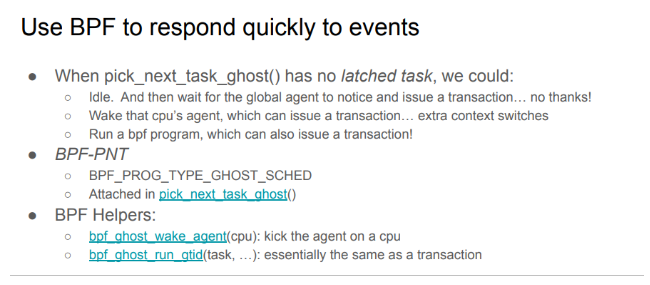

4、bpf

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

| //include/linux/bpf_types.h

BPF_PROG_TYPE(BPF_PROG_TYPE_GHOST_SCHED, ghost_sched, struct bpf_ghost_sched,

struct bpf_ghost_sched_kern)

//kernel/sched/bpf.c

BPF_CALL_2(bpf_ghost_wake_agent, struct bpf_ghost_sched_kern *, ctx, u32, cpu)

{

return ghost_wake_agent_on_check(cpu);

}

//

BPF_CALL_4(bpf_ghost_run_gtid, struct bpf_ghost_sched_kern *, ctx, s64, gtid,

u32, task_barrier, int, run_flags)

{

return ghost_run_gtid_on(gtid, task_barrier, run_flags,

smp_processor_id());

}

//

static const struct bpf_link_ops bpf_ghost_sched_link_ops = {

.release = bpf_ghost_sched_link_release,

.dealloc = bpf_ghost_sched_link_dealloc,

};

|

5、kernel/sched/ghostfs.c

1 提供enclave的创建

enclave机制

简介

传统用法

ghost Enclave

1

2

3

4

| //kernel/sched/ghostfs.c

ghostfs_init

->| ghost_setup_root

->| kernfs_create_root //

|

1

| https://www.binss.me/blog/sysfs-udev-and-Linux-Unified-Device-Model/ //kernfs->sysfs

|

1

2

| //ghost enclave

mount -t ghost /dev/ghost /sys/fs/ghost

|

1

2

| 1、先执行agent-shinjuku

2、执行用例

|

2 提供cpu_data

1

2

3

4

| /sys/fs/ghost/enclave_1/cpu_data

设置cpu->rq->ghost_rq->latched_task,提交该cpu锁定的task。

方便ghost-userspace在该cpu上执行指定task.

**方法**: 与用户态共享同一块物理内存

|

3 提供status_word

1

2

3

4

5

| /sys/fs/ghost/enclave_1/sw_regions/sw_0

进程的task->ghost->status_word从这片内存申请,记录进程的执行时间相关信息;

方便ghost-userspace获取进程执行时间。

**方法**:与用户态共享一块物理内存

每次tick更新进程的task,在dequeue_task_ghost、put_prev_task_ghost、_ghost_task_preempted、_ghost_task_new、ghost_task_yield、ghost_switchto时,更新到task->ghost->status_word中方便用户态获取。

|

6、 ghost调度分析

1

2

3

4

5

6

7

8

9

10

11

12

| _ghost_task_preempted

<- ghost_latched_task_preempted //在ghost.latched_task但是还未pick走在cpu运行,例如

<- ghost_prepare_task_switch //1、线程切换时,next非ghost线程

<- invalidate_cached_tasks //2. 线程迁移到其他cpu,无效latched_task; 3.线程重新设置调度类 4. DEQUEUE_SAVE

<- task_tick_ghost //5. 被agent线程抢占

<- pick_next_ghost_agent //5. 被agent线程抢占

<- release_from_ghost

<- ghost_set_pnt_state //6.被新任务覆盖

------

<- ghost_task_preempted

<- ghost_prepare_task_switch //正在运行的prev被抢占

<- pick_next_ghost_agent //prev被agent任务抢占

|

1

2

| ghost_task_blocked

<- ghost_produce_prev_msgs //prev->ghost.blocked_task=true,进程切换时如果发现prev线程是Dequeue sleep,则为block状态

|

1

2

3

4

5

| task_woken_ghost

<- ghost_class.task_woken //调度类的task_woken

<- ttwu_do_wakeup //wake up等待任务

----------

<- wake_up_new_task //wake up刚创建的任务

|

目前per-cpu ebpf的调度的问题:

1、锁

2、进程调度延迟

1

| https://zhuanlan.zhihu.com/p/462728452 //测量调度延迟

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

| kworker/1:1-250 [001] d... 129.594930: bpf_trace_printk: sched_switch prev kworker/1:1

kworker/1:1-250 [001] d... 129.594932: bpf_trace_printk: -> next swapper/1

ghostctl-1963 [016] dN.. 130.895647: bpf_trace_printk: TASK NEW

ghostctl-1963 [016] dN.. 130.895658: bpf_trace_printk: fffe start

ghostctl-1963 [016] dN.. 130.895659: bpf_trace_printk: fffc select cpu 1

ghostctl-1963 [016] dN.. 130.895663: bpf_trace_printk: add 1 rq have 1 task 1963 gtid

<idle>-0 [001] d... 132.010916: bpf_trace_printk: sched_switch prev swapper/1

<idle>-0 [001] d... 132.010925: bpf_trace_printk: -> next migration/1

migration/1-18 [001] d... 132.010957: bpf_trace_printk: del 1 rq have 0 task 1963 Pid

migration/1-18 [001] d... 132.010969: bpf_trace_printk: run cpu 1 pid 1963 ret 0

migration/1-18 [001] d... 132.010973: bpf_trace_printk: sched_switch prev migration/1

migration/1-18 [001] d... 132.010974: bpf_trace_printk: -> next ghostctl

ls-1963 [001] dN.. 132.013195: bpf_trace_printk: TASK BLOCKED

ls-1963 [001] dN.. 132.013200: bpf_trace_printk: pid 1963 runtime 36ba0a cpu 1

ls-1963 [001] dN.. 132.013201: bpf_trace_printk: block pid 1963 BLOCK but in CPU 1

ls-1963 [001] dN.. 132.013202: bpf_trace_printk: fffc start

ls-1963 [001] dN.. 132.013202: bpf_trace_printk: fffe set cpu 1

ls-1963 [001] d... 132.013207: bpf_trace_printk: sched_switch prev ls

ls-1963 [001] d... 132.013207: bpf_trace_printk: -> next agent_ceph

|

do_idle函数分析:

1

2

| https://www.cnblogs.com/Linux-tech/p/13326567.html

https://www.cnblogs.com/LoyenWang/p/11379937.html

|

resched_cpu_unlocked:

1

2

| https://zhuanlan.zhihu.com/p/500191837 IPI中断类型

https://zhuanlan.zhihu.com/p/373959024 进程切换,地址空间切换

|

内核主动触发调度点:

1

2

| https://blog.csdn.net/pwl999/article/details/78817899 Linux schedule 1、调度的时刻

https://blog.51cto.com/qmiller/4842433 Linux内核进程调度发生的时间点

|

问题定位原因:

1、idle线程无法退出的原因:

1> ipi_scheduler确实会从idle状态退出,走进schedule_idle

2> schedule_idle->__schedule->pick_next_task 走进fair_class的优化分支,因为此时ghost_task还没有入队;

跟踪日志如下:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

| migration/1-18 [001] d... 84.012159: bpf_trace_printk: -> next swapper/1

<idle>-0 [001] dN.. 84.012161: bpf_trace_printk: schedule_idle

<idle>-0 [001] d... 84.012167: bpf_trace_printk: cpu_idle: state 1 cpu 1

ghostctl-3518 [018] dN.. 85.605119: bpf_trace_printk: TASK NEW //ghost task 从cfs 切成ghost调度类

ghostctl-3518 [018] dN.. 85.605125: bpf_trace_printk: fffe start

ghostctl-3518 [018] dN.. 85.605126: bpf_trace_printk: fffc select cpu 1

ghostctl-3518 [018] dN.. 85.605128: bpf_trace_printk: add 1 rq have 1 task 3518 gtid

<idle>-0 [001] d.h. 85.605163: bpf_trace_printk: ipi_entry: Rescheduling interrupts //ipi_resche

<idle>-0 [001] dNh. 85.605167: bpf_trace_printk: ipi_exit: Rescheduling interrupts

<idle>-0 [001] dN.. 85.605169: bpf_trace_printk: cpu_idle: state ffffffff cpu 1 //idle进程退出

<idle>-0 [001] dN.. 85.605173: bpf_trace_printk: schedule_idle //重新调度idle,发现无任务

<idle>-0 [001] d... 85.605179: bpf_trace_printk: cpu_idle: state 1 cpu 1 //重新pick idle

<idle>-0 [001] dN.. 88.012086: bpf_trace_printk: cpu_idle: state ffffffff cpu 1

<idle>-0 [001] dN.. 88.012121: bpf_trace_printk: schedule_idle

<idle>-0 [001] d... 88.012127: bpf_trace_printk: sched_switch prev swapper/1

<idle>-0 [001] d... 88.012128: bpf_trace_printk: -> next migration/1

migration/1-18 [001] d... 88.012138: bpf_trace_printk: del 1 rq have 0 task 3518 Pid

migration/1-18 [001] d... 88.012147: bpf_trace_printk: run cpu 1 pid 3518 ret 0

migration/1-18 [001] d... 88.012149: bpf_trace_printk: sched_switch prev migration/1

migration/1-18 [001] d... 88.012152: bpf_trace_printk: -> next ghostctl

ghostctl-3518 [001] d.h. 88.012156: bpf_trace_printk: ipi_entry: IRQ work interrupts

ghostctl-3518 [001] d.h. 88.012157: bpf_trace_printk: ipi_exit: IRQ work interrupts

ghostctl-3518 [001] d... 88.012185: bpf_trace_printk: ipi_raise: Function call interrupt

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

| if (likely(prev->sched_class <= &fair_sched_class &&

rq->nr_running == rq->cfs.h_nr_running)) {

//prev->sched_class == idle_sched_class <= fair_sched_class 满足条件

//rq->nr_running == rq->cfs.h_nr_running == 0 满足条件

p = pick_next_task_fair(rq, prev, rf); //这里返回NULL

if (unlikely(p == RETRY_TASK))

goto restart;

/* Assumes fair_sched_class->next == idle_sched_class */

if (!p) {

put_prev_task(rq, prev);

p = pick_next_task_idle(rq); //这里重新又pick idle,重新进去idle

}

|

搞清楚latched_task与ghost_rq->task_list的关系?

2、内核没有打开强制功能,内核中断不会触发主动调度

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

| .align 6

SYM_CODE_START_LOCAL_NOALIGN(el1_irq)

kernel_entry 1

gic_prio_irq_setup pmr=x20, tmp=x1

enable_da_f

mov x0, sp

bl enter_el1_irq_or_nmi

irq_handler

#ifdef CONFIG_PREEMPTION //内核抢占功能使能

ldr x24, [tsk, #TSK_TI_PREEMPT] // get preempt count

alternative_if ARM64_HAS_IRQ_PRIO_MASKING

/*

* DA_F were cleared at start of handling. If anything is set in DAIF,

* we come back from an NMI, so skip preemption

*/

mrs x0, daif

orr x24, x24, x0

alternative_else_nop_endif

cbnz x24, 1f // preempt count != 0 || NMI return path

bl arm64_preempt_schedule_irq // irq en/disable is done inside

1:

#endif

mov x0, sp

bl exit_el1_irq_or_nmi

kernel_exit 1

SYM_CODE_END(el1_irq)

|

ghos怎么做到主动抢占的呢?

1

| https://www.coolcou.com/linux-kernel/linux-kernel-references/linux-kernel-scheduling-process.html Linux内核调度流程-抢占的发生

|

1

2

| ghost_task_new(rq, prev); //给用户态发送task_new消息

ghost_wake_agent_of(prev); //prev

|

目前信息:ghost消息流程的后面如果需要主动抢占的,会调用wakeup agent,如果是非central的cpu,执行yeild_task->ghost_run->schedule()触发调度。

抢占点设计:复用内核抢占,打开内核抢占功能???

2> ghost-userspace

分两部分: 用户态代码 + ebpf代码

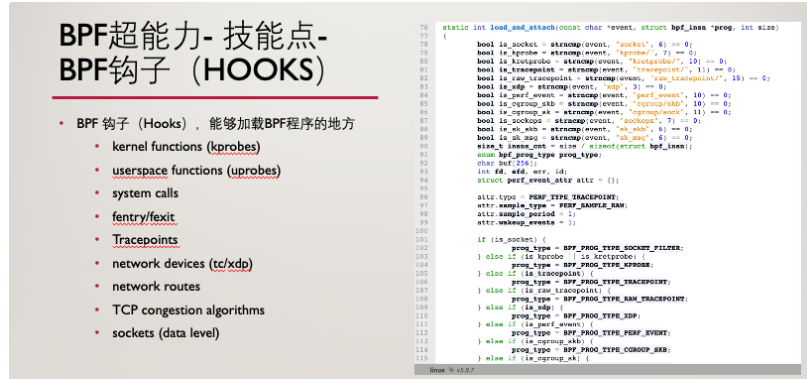

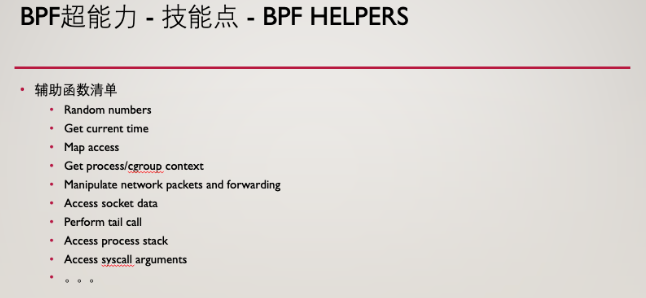

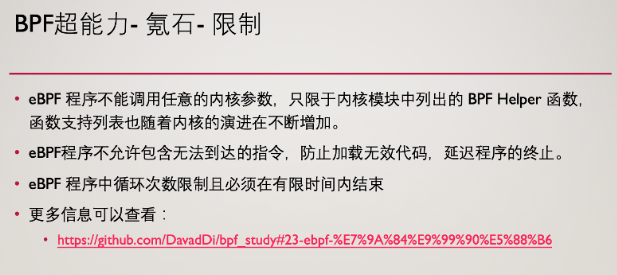

1> ebpf机制

简介

BPF 是 Linux 内核中一个非常灵活与高效的类虚拟机(virtual machine-like)组件, 能够在许多内核 hook 点安全地执行字节码(bytecode )。很多 内核子系统都已经使用了 BPF,例如常见的网络(networking)、跟踪( tracing)与安全(security ,例如沙盒)。reference:https://arthurchiao.art/blog/cilium-bpf-xdp-reference-guide-zh/

1

| https://pwl999.github.io/2018/09/28/bpf_kernel/ //从内核代码层面分析 bpf load verify run...

|

reference:https://zhuanlan.zhihu.com/p/373090595

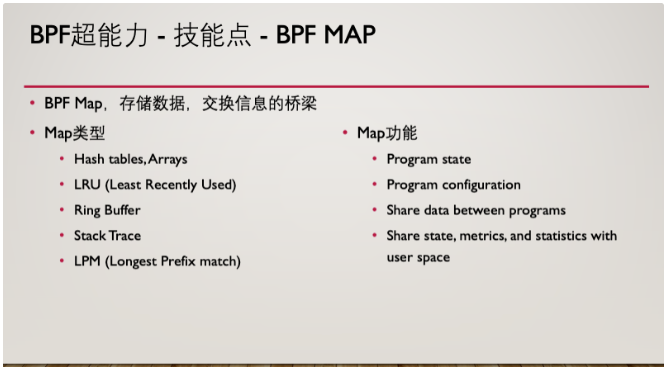

bpf map

1

2

3

4

5

6

7

8

9

10

11

12

13

14

| //map

struct {

__uint(type, BPF_MAP_TYPE_HASH);

__uint(max_entries, MAX_PIDS);

__type(key, u32);

__type(value, struct task_stat);

} task_stats SEC(".maps");

struct {

__uint(type, BPF_MAP_TYPE_PERCPU_ARRAY);

__uint(max_entries, NR_HISTS);

__type(key, u32);

__type(value, struct hist);

} hists SEC(".maps");

|

bpf SEC – bpf hook

1

2

3

4

5

6

7

8

9

| //SEC

SEC("tp_btf/sched_wakeup")

int BPF_PROG(sched_wakeup, struct task_struct *p)

{

if (task_has_ghost_policy(p))

task_runnable(p);

return 0;

}

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

| 引用:https://www.shuzhiduo.com/A/kvJ3q9g7dg/

#include "vmlinux.h" /* all kernel types */

#include <bpf/bpf_helpers.h> /* most used helpers: SEC, __always_inline, etc */

#include <bpf/bpf_core_read.h> /* for BPF CO-RE helpers */

内核空间的BPF代码如下(假设生成的.o文件名为runqslower.bpf.o):

// SPDX-License-Identifier: GPL-2.0

// Copyright (c) 2019 Facebook

/* BPF程序包含的头文件,可以看到内容想相当简洁 */

#include "vmlinux.h"

#include <bpf/bpf_helpers.h>

#include "runqslower.h"

#define TASK_RUNNING 0

#define BPF_F_CURRENT_CPU 0xffffffffULL

/* 在BPF代码侧,可以使用一个 const volatile 声明只读的全局变量,只读的全局变量,变量最后会存在于runqslower.bpf.o的.rodata只读段,用户侧可以在BPF程序加载前读取或修改该只读段的参数【1】 */

const volatile __u64 min_us = 0;

const volatile pid_t targ_pid = 0;

/* 定义名为 start 的map,类型为 BPF_MAP_TYPE_HASH。容量为10240,key类型为u32,value类型为u64。可以在【1】中查看BPF程序解析出来的.maps段【2】 */

struct {

__uint(type, BPF_MAP_TYPE_HASH);

__uint(max_entries, 10240);

__type(key, u32);

__type(value, u64);

} start SEC(".maps");

/* 由于 PERF_EVENT_ARRAY, STACK_TRACE 和其他特殊的maps(DEVMAP, CPUMAP, etc) 尚不支持key/value类型的BTF类型,因此需要直接指定 key_size/value_size */

struct {

__uint(type, BPF_MAP_TYPE_PERF_EVENT_ARRAY);

__uint(key_size, sizeof(u32));

__uint(value_size, sizeof(u32));

} events SEC(".maps");

/* record enqueue timestamp */

/* 自定义的辅助函数必须标记为 static __always_inline。该函数用于保存唤醒的任务事件,key为pid,value为唤醒的时间点 */

__always_inline

static int trace_enqueue(u32 tgid, u32 pid)

{

u64 ts;

if (!pid || (targ_pid && targ_pid != pid))

return 0;

ts = bpf_ktime_get_ns();

bpf_map_update_elem(&start, &pid, &ts, 0);

return 0;

}

/* 所有BPF程序提供的功能都需要通过 SEC() (来自 bpf_helpers.h )宏来自定义section名称【3】。可以在【1】中查看BPF程序解析出来的自定义函数 */

/* 唤醒一个任务,并保存当前时间 */

SEC("tp_btf/sched_wakeup")

int handle__sched_wakeup(u64 *ctx)

{

/* TP_PROTO(struct task_struct *p) */

struct task_struct *p = (void *)ctx[0];

return trace_enqueue(p->tgid, p->pid);

}

/* 唤醒一个新创建的任务,并保存当前时间。BPF的上下文为一个task_struct*结构体 */

SEC("tp_btf/sched_wakeup_new")

int handle__sched_wakeup_new(u64 *ctx)

{

/* TP_PROTO(struct task_struct *p) */

struct task_struct *p = (void *)ctx[0];

return trace_enqueue(p->tgid, p->pid);

}

/* 计算一个任务入run队列到出队列的时间 */

SEC("tp_btf/sched_switch")

int handle__sched_switch(u64 *ctx)

{

/* TP_PROTO(bool preempt, struct task_struct *prev,

* struct task_struct *next)

*/

struct task_struct *prev = (struct task_struct *)ctx[1];

struct task_struct *next = (struct task_struct *)ctx[2];

struct event event = {};

u64 *tsp, delta_us;

long state;

u32 pid;

/* ivcsw: treat like an enqueue event and store timestamp */

/* 如果被切换的任务的状态仍然是TASK_RUNNING,说明其又重新进入run队列,更新入队列的时间 */

if (prev->state == TASK_RUNNING)

trace_enqueue(prev->tgid, prev->pid);

/* 获取下一个任务的PID */

pid = next->pid;

/* fetch timestamp and calculate delta */

/* 如果该任务并没有被唤醒,则无法正常进行任务切换,返回0即可 */

tsp = bpf_map_lookup_elem(&start, &pid);

if (!tsp)

return 0; /* missed enqueue */

/* 当前切换时间减去该任务的入队列时间,计算进入run队列到真正调度的毫秒级时间 */

delta_us = (bpf_ktime_get_ns() - *tsp) / 1000;

if (min_us && delta_us <= min_us)

return 0;

/* 更新events section,以便用户侧读取 */

event.pid = pid;

event.delta_us = delta_us;

bpf_get_current_comm(&event.task, sizeof(event.task));

/* output */

bpf_perf_event_output(ctx, &events, BPF_F_CURRENT_CPU,

&event, sizeof(event));

/* 该任务已经出队列,删除map */

bpf_map_delete_elem(&start, &pid);

return 0;

}

char LICENSE[] SEC("license") = "GPL";

|

bfp辅助函数

辅助函数是一组内核定义的函数集,使 eBPF 程序能从内核读取数据, 或者向内核写入数据(retrieve/push data from/to the kernel)

1

2

3

4

5

6

| 调用约定

辅助函数的调用约定(calling convention)也是固定的:

- R0:存放程序返回值

- R1 ~ R5:存放函数参数(function arguments)

- R6 ~ R9:**被调用方**(callee)负责保存的寄存器

- R10:栈空间 load/store 操作用的只读 frame pointer

|

1

2

| BPF_PROG_TYPE

BPF_CALL

|

1

2

| //介绍BPF_CALL

https://arthurchiao.art/blog/on-getting-tc-classifier-fully-programmable-zh/

|

1

2

3

4

| 内核将辅助函数抽象成 BPF_CALL_0() 到 BPF_CALL_5() 几个宏,形式和相应类型的系 统调用类似。

当前可用的 BPF 辅助函数已经有几十个,并且数量还在不断增加,例如,写作本文时,tc BPF 程序可以使用38 种不同的 BPF 辅助函数。对于一个给定的 BPF 程序类型,内核的 struct bpf_verifier_ops 包含了 get_func_proto 回调函数,这个函数提供了从某个 特定的enum bpf_func_id 到一个可用的辅助函数的映射.

reference:https://arthurchiao.art/blog/cilium-bpf-xdp-reference-guide-zh/#12-%E8%BE%85%E5%8A%A9%E5%87%BD%E6%95%B0

|

1

2

| //get_func_proto介绍

https://blogs.oracle.com/linux/post/bpf-in-depth-bpf-helper-functions

|

1

2

3

4

5

6

7

| //译文附录:一些相关的 BPF 内核实现

https://arthurchiao.art/blog/lifetime-of-bpf-objects-zh/

//map

bpf_create_map() -> bpf_create_map_xattr() -> sys_bpf() -> SYSCALL_DEFINE3(bpf, ...):系统调用 SYSCALL_DEFINE3(bpf, ...) -> case BPF_MAP_CREATE -> map_create():创建 map

//load bpf program

bpf(BPF_PROG_LOAD) -> sys_bpf() -> SYSCALL_DEFINE3(bpf, ...) SYSCALL_DEFINE3(bpf, ...) -> case BPF_PROG_LOAD -> bpf_prog_load():加载逻辑

bpf_prog_load() -> bpf_check():执行内核校验 bpf_check() -> replace_map_fd_with_map_ptr() -> bpf_map_inc():更新 map refcnt

|

2 用户态代码

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

| //创建ghost class的进程

GhostThread::GhostThread(KernelScheduler ksched, std::function<void()> work)

: ksched_(ksched) {

GhostThread::SetGlobalEnclaveCtlFdOnce();

thread_ = std::thread([this, w = std::move(work)] { //进程创建

tid_ = GetTID();

gtid_ = Gtid::Current();

// TODO: Consider moving after SchedEnterGhost.

started_.Notify();

if (ksched_ == KernelScheduler::kGhost) {

const int ret = SchedTaskEnterGhost(/*pid=*/0); //如果是kGhost的话,设置为ghost_class调度类。

CHECK_EQ(ret, 0);

}

std::move(w)();

});

started_.WaitForNotification();

}

|

1

2

3

4

| //agent_shinjuku

main

->ghost::AgentProcess<ghost::FullShinjukuAgent<ghost::LocalEnclave>, ghost::ShinjukuConfig>(config)

|

3> experiments

1

2

3

| //antagonist

./agent-sol 或者agent-shinjuku

./antagonist --scheduler=ghost --cpus=1,2,3,4,5,6,7,8

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

| //rocksdb

./rocksdb --scheduler=ghost --rocksdb_db_path=/home/rocksdb/

///cfs

./rocksdb --scheduler=cfs --rocksdb_db_path=/home/rocksdb/ --throughput=200000 --num_workers=20 --worker_cpus=1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,21 --batch=1

All:

Stage Total Requests Throughput (req/s) Min (us) 50% (us) 99% (us) 99.5% (us) 99.9% (us) Max (us)

----------------------------------------------------------------------------------------------------------------------------------------------------

Ingress Queue Time 23528589 199938 0 7559 2293590 2537468 2729921 2786260

Repeatable Handle Time 23528589 199938 0 854 177527 206358 247805 254317

Worker Queue Time 23528589 199938 0 0 0 1 4 25983

Worker Handle Time 23528589 199938 10 10 13 14 15 13019

Total 23528589 199938 11 9601 2305599 2549779 2739167 2800522

//ghost

./rocksdb --scheduler=ghost --rocksdb_db_path=/home/rocksdb/ --throughput=200000 --num_workers=20 --batch=1

Stage Total Requests Throughput (req/s) Min (us) 50% (us) 99% (us) 99.5% (us) 99.9% (us) Max (us)

----------------------------------------------------------------------------------------------------------------------------------------------------

Ingress Queue Time(入队排队耗时) 8820243 200041 0 0 32501 47881 71177 80389

Repeatable Handle Time 8820243 200041 0 0 0 0 0 86

Worker Queue Time (查询耗时) 8820243 200041 0 0 0 0 4 694068

Worker Handle Time 8820243 200041 10 10 13 13 15 803878

Total 8820243 200041 10 11 34104 50669 76510 803880

在200000req/s情况下, 1 batch任务, 20个work来处理req时,ghost调度的99%的时延比cfs号很多

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

| Thread 1 "rocksdb" received signal SIGABRT, Aborted.

0x00007ffff7b5bdd3 in pthread_kill () from /usr/lib64/libc.so.6

(gdb) bt

#0 0x00007ffff7b5bdd3 in pthread_kill () from /usr/lib64/libc.so.6

#1 0x00007ffff7b0ffc6 in raise () from /usr/lib64/libc.so.6

#2 0x00007ffff7afb457 in abort () from /usr/lib64/libc.so.6

#3 0x00007ffff7e93c44 in ?? () from /usr/lib64/libstdc++.so.6

#4 0x00007ffff7f12212 in std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >::back() () from /usr/lib64/libstdc++.so.6

#5 0x000000000053dc4f in rocksdb::SanitizeOptions(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, rocksdb::DBOptions const&) ()

#6 0x000000000083ce2b in rocksdb::DBImpl::DBImpl(rocksdb::DBOptions const&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, bool, bool) ()

#7 0x0000000000546c68 in rocksdb::DBImpl::Open(rocksdb::DBOptions const&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, std::vector<rocksdb::ColumnFamilyDescriptor, std::allocator<rocksdb::ColumnFamilyDescriptor> > const&, std::vector<rocksdb::ColumnFamilyHandle*, std::allocator<rocksdb::ColumnFamilyHandle*> >*, rocksdb::DB**, bool, bool) ()

#8 0x00000000005463a2 in rocksdb::DB::Open(rocksdb::DBOptions const&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, std::vector<rocksdb::ColumnFamilyDescriptor, std::allocator<rocksdb::ColumnFamilyDescriptor> > const&, std::vector<rocksdb::ColumnFamilyHandle*, std::allocator<rocksdb::ColumnFamilyHandle*> >*, rocksdb::DB**) ()

#9 0x0000000000546158 in rocksdb::DB::Open(rocksdb::Options const&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, rocksdb::DB**)

()

#10 0x0000000000421e41 in ghost_test::Database::OpenDatabase(std::filesystem::__cxx11::path const&) ()

#11 0x0000000000421f26 in ghost_test::Database::Database(std::filesystem::__cxx11::path const&) ()

#12 0x000000000043fa9b in ghost_test::Orchestrator::Orchestrator(ghost_test::Orchestrator::Options, unsigned long) ()

#13 0x0000000000426789 in ghost_test::GhostOrchestrator::GhostOrchestrator(ghost_test::Orchestrator::Options) ()

#14 0x000000000043a586 in std::_MakeUniq<ghost_test::GhostOrchestrator>::__single_object std::make_unique<ghost_test::GhostOrchestrator, ghost_test::Orchestrator::Options&>(ghost_test::Orchestrator::Options&) ()

|

1

2

3

4

5

6

7

8

9

10

11

12

13

| main

->std::make_unique<ghost_test::GhostOrchestrator>(options) //main.cc

->GhostOrchestrator::GhostOrchestrator(Orchestrator::Options opts) //rocksdb/ghost_orchestrator.cc

-> Orchestrator::Orchestrator(Options options, size_t total_threads) //experiments/rocksdb/orchestrator.cc

//database、thread_pool构造函数 创建了database和thread_pool.

{

->InitThreadPool()

//kernel_schedulers(cfs, ghost, ghost,..) thread_work(GhostOrchestrator::LoadGenerator, GhostOrchestrator::Worker, GhostOrchestrator::Worker, ..)

//thread_pool().Init(kernel_schedulers, thread_work);

->InitGhost()

//设置进程ghost参数

}

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

| // thread_pool.Init实现: 根据KernelScheduler[]类型设置线程sched_attr,并执行thread_work[]

27 void ExperimentThreadPool::Init(

28 const std::vector<ghost::GhostThread::KernelScheduler>& ksched,

29 const std::vector<std::function<void(uint32_t)>>& thread_work) {

30 CHECK_EQ(ksched.size(), num_threads_);

31 CHECK_EQ(ksched.size(), thread_work.size());

32

33 threads_.reserve(num_threads_);

34 for (uint32_t i = 0; i < num_threads_; i++) {

35 threads_.push_back(std::make_unique<ghost::GhostThread>( //根据ksched的type设置进程的sched_attr.

36 ksched[i],

37 std::bind(&ExperimentThreadPool::ThreadMain, this, i, thread_work[i]))); //ThreadMain函数执行thread_work[i]

38 }

...

45 void ExperimentThreadPool::ThreadMain(

46 uint32_t i, std::function<void(uint32_t)> thread_work) {

47 while (!ShouldExit(i)) {

48 thread_work(i);

49 }

50 num_exited_.fetch_add(1, std::memory_order_release);

51 }

|

3> ghost用户态工具的编译

1 bazel

1

2

3

4

| //增加-g参数

bazel build -c opt --copt="-g" --cxxopt="-g" --host_copt="-g" --host_cxxopt="-g" ...

//~/.bazelrc

build --cxxopt='-std=c++17' --cxxopt='-g'

|

简介

Bazel的目标之一是创建一个构建系统,在这个系统中,构建目标的输入和输出是完全指定的,因此构建系统可以精确地知道它们的输入和输出,这样可以更准确地分析和确定构建系统依赖图中过时的构建工件。使依赖图分析更加准确,通过避免重新执行不必要的构建目标,从而可能改善构建时间。通过避免构建目标可能依赖于过时的输入工件的错误,提高了构建的可靠性。

1

| https://docs.bazel.build/versions/main/command-line-reference.html help文件

|

依赖

1

| cmake make gcc gcc-c++ elfutils-devel numactl-devel numactl-libs libbpf libbpf-devel bcc bpftools libcap-devel llvm llvm-devel python3-pip

|

编译

1

2

| ##-g参数

bazel build -c opt --copt="-g" --cxxopt="-g" --host_copt="-g" --host_cxxopt="-g" ...

|

1

2

3

4

5

6

7

8

9

| //http文件服务器搭建:

https://blog.51cto.com/soysauce93/1725318

https://www.cnblogs.com/zhuyeshen/p/11693362.html

//遇事不行就关闭防火墙:

systemctl stop firewalld.service

Forbidden:You don't have permission to access this resource.

//遇到说权限不足的,注意看一下目录权限

//修改文件web服务器

sed -i "s/https:\/\/github.com\/bazelbuild\/rules_foreign_cc\/archive\//http:\/\/x.x.x.x\/bazel\//g" WORKSPACE

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

| 直接编译方法:

1、解决unable to find valid certification path to requested target

1>先按照这个>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

//指定位置和密码

alias bazel='bazel --host_jvm_args=-Djavax.net.ssl.trustStore=/usr/lib/jvm/java-11-openjdk-11.0.9.11-4.oe1.x86_64/lib/security/cacerts --host_jvm_args=-Djavax.net.ssl.trustStorePassword=changeit'

bazel --host_jvm_args=-Djavax.net.ssl.trustStore=/usr/lib/jvm/java-11-openjdk-11.0.9.11-4.oe1.x86_64/lib/security/cacerts --host_jvm_args=-Djavax.net.ssl.trustStorePassword=changeit build agent_shinjuku

2> 安装对应网站证书 >>>>>>>>>>>>>>>>>>>>>>>>>

//导入证书:点击左上角的锁icon -> 证书 -> 详细信息 -> 复制到文件 -> 选择Base64编码的X.509格式,保存证书到本地目录

keytool -import -file /root/caCert.cer -keystore /usr/lib/jvm/java-11-openjdk-11.0.9.11-4.oe1.x86_64/lib/security/cacerts -trustcacerts -alias github_crt -storepass changeit

2、为了快捷下载linux包,采用本地web文件服务器

systemctl start httpd

systemctl stop firewalld.service

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

| //编译遇到pip CERTIFICATE_VERIFY_FAILED失败:

SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self signed certificate in certificate chain (_ssl.c:1123)

解决:ssh-keygen

//etc/pip.conf

[root@localhost ghost-userspace]# cat ~/.pip/pip.conf

[global]

trusted-host = pypi.python.org

pypi.org

files.pythonhosted.org

verify = false

//etc/pip.conf

[root@localhost ghost-userspace]# cat /etc/pip.conf

[global]

trusted-host = pypi.python.org

pypi.org

files.pythonhosted.org

[http]

sslVerify = false

[https]

sslVerify = false

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

| //编译失败 'always_inline' 'uint8x16_t vaesmcq_u8(uint8x16_t)'

In file included from external/com_google_absl/absl/random/internal/randen_hwaes.cc:229:

/usr/lib/gcc/aarch64-linux-gnu/10.3.1/include/arm_neon.h: In function 'Vector128 {anonymous}::AesRound(const Vector128&, const Vector128&)':

/usr/lib/gcc/aarch64-linux-gnu/10.3.1/include/arm_neon.h:12332:1: error: inlining failed in call to 'always_inline' 'uint8x16_t vaesmcq_u8(uint8x16_t)': target specific option mismatch

12332 | vaesmcq_u8 (uint8x16_t data)

//解决: + cpu_aarch64

https://github.com/abseil/abseil-cpp/commit/2e94e5b6e152df9fa9c2fe8c1b96e1393973d32c

":cpu_x64_windows": ABSL_RANDOM_HWAES_MSVC_X64_FLAGS,

":cpu_k8": ABSL_RANDOM_HWAES_X64_FLAGS,

":cpu_ppc": ["-mcrypto"],

//+ ":cpu_aarch64": ABSL_RANDOM_HWAES_ARM64_FLAGS,

# Supported by default or unsupported.

"//conditions:default": [],

@@ -70,6 +71,7 @@ def absl_random_randen_copts_init():

"darwin",

"x64_windows_msvc",

"x64_windows",

// + "aarch64",

]

for cpu in cpu_configs:

native.config_setting(

|

1

2

3

| //arm64没有pause指令

/tmp/ccVaJFns.s:15314: Error: unknown mnemonic `pause' -- `pause'

//解决: pause -> yield

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

| 1> 遇到编译错误

ERROR: /root/.cache/bazel/_bazel_root/7e9b6806b9417f00ba913f77eac102ca/external/rules_foreign_cc/toolchains/BUILD.bazel:77:22: @platforms//os:windows is not a valid configuration key for @rules_foreign_cc//toolchains:built_make

//直接注释解决: 看这个暴力,非常有用

//rules_foreign_cc/toolchains/BUILD.bazel:77

native_tool_toolchain(

name = "built_make",

path = select({

"//conditions:default": "$(execpath :make_tool)/bin/make",

}),

target = ":make_tool",

)

//rules_foreign_cc/foreign_cc/private/framework/platform.bzl:33

33 def framework_platform_info(name = "platform_info"):

34 """Define a target containing platform information used in the foreign_cc framework"""

35 _framework_platform_info(

36 name = name,

37 os = select({

38 "//conditions:default": "unknown",

39 }),

40 visibility = ["//visibility:public"],

41 )

//参考解决,实际没用

https://docs.bazel.build/versions/main/platforms-intro.html

BUILD文件里指定编译目标,platforms, cpus:

https://docs.bazel.build/versions/main/configurable-attributes.html

Example: Constraint Values

platform(

name = "linux_x86",

constraint_values = [

"@platforms//os:linux",

"@platforms//cpu:x86_64",

],

)

https://blog.csdn.net/don_chiang709/article/details/105727621

wget -c https://github.com/bazelbuild/bazel/releases/download/3.0.0/bazel-3.0.0-installer-linux-x86_64.sh

chmod +x bazel-3.0.0-installer-linux-x86_64.sh

./bazel-3.0.0-installer-linux-x86_64.sh --user

https://john-millikin.com/bazel-school/toolchains

@bazel_tools//platforms:cpu

@bazel_tools//platforms:arm

@bazel_tools//platforms:ppc

@bazel_tools//platforms:s390x

@bazel_tools//platforms:x86_32

@bazel_tools//platforms:x86_64

@bazel_tools//platforms:os

@bazel_tools//platforms:freebsd

@bazel_tools//platforms:linux

@bazel_tools//platforms:osx

@bazel_tools//platforms:windows

|

1

2

3

4

| //clean:

bazel clean --expunge

//编译顺序:

base -> base-test -> ghost -> shared-> bazel build ... //编译所有的

|

1

2

3

| //bpf_skeleton -> 定义bpf/bpf.bzl中

http://manpages.ubuntu.com/manpages/focal/man8/bpftool-gen.8.html

-> bpftools

|

1

2

| //[Bazel]自定义工具链

https://cloud.tencent.com/developer/article/1677379

|

1

2

| //bazel toolchain bazel 工具链

https://kekxv.github.io/2021/08/06/bazel%20toolchain%2001/

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

| //查看本地编译配置

https://www.coder.work/article/2786053

`bazel info output_base`/external/local_config_cc

---------------

[root@localhost ghost-userspace]# ll `bazel info output_base`/external/local_config_cc

total 32K

lrwxrwxrwx. 1 root root 126 Nov 19 10:06 armeabi_cc_toolchain_config.bzl -> /root/.cache/bazel/_bazel_root/7433de7b30d6d58a288c26dfb16d43d1/external/bazel_tools/tools/cpp/armeabi_cc_toolchain_config.bzl

-rwxr-xr-x. 1 root root 4.4K Nov 19 10:06 BUILD

-rwxr-xr-x. 1 root root 552 Nov 19 10:06 builtin_include_directory_paths

lrwxrwxrwx. 1 root root 123 Nov 19 10:06 cc_toolchain_config.bzl -> /root/.cache/bazel/_bazel_root/7433de7b30d6d58a288c26dfb16d43d1/external/bazel_tools/tools/cpp/unix_cc_toolchain_config.bzl

-rwxr-xr-x. 1 root root 739 Nov 19 10:06 cc_wrapper.sh

drwxr-xr-x. 3 root root 4.0K Nov 19 10:06 tools

-rw-r--r--. 1 root root 111 Nov 19 10:06 WORKSPACE

|

1

2

3

4

5

6

7

8

9

10

| //rules_foreign_cc支持的cmake、make、ninja版本

//rules_foreign_cc/foreign_cc/repositories.bzl

register_default_tools = True,

cmake_version = "3.21.2",

make_version = "4.3",

ninja_version = "1.10.2",

cmake 3.19.2

make 4.3

ninja_build 1.8.2

|

编译所有的ghost-userspace的二进制:

1

2

3

4

5

6

7

|

[root@localhost ghost-userspace]# bazel build ...

INFO: Analyzed 62 targets (17 packages loaded, 1505 targets configured).

INFO: Found 62 targets...

INFO: Elapsed time: 48.090s, Critical Path: 15.80s

INFO: 531 processes: 531 linux-sandbox.

INFO: Build completed successfully, 798 total actions

|

4> 思考

1、现在是CPU的进程调度放到用户态,这个有什么优势? 还能继续做些什么?

2、能结合反馈式智能调度吗?

3、进程<—->资源调度?

4、CFS调度器的参数是全局的? 如果有资源隔离的话,可以将CFS也可以按照隔离域独立设置参数吗?

5、内核调度器中bpf的拓展, 那内存部分ebpf可以有所作为吗?

1

2

3

4

5

6

7

8

9

10

11

| https://www.ebpf.top/post/cfs_scheduler_bpf/ cfs的bpf优化.

https://jirnal.com/train-of-ebpf-in-cpu-scheduler/

TRAIN OF EBPF IN CPU SCHEDULER

eBPF has been frail broadly in efficiency profiling and monitoring. In this affirm, I am going to exclaim a location of eBPF positive aspects that aid show screen and beef up cpu scheduling performances. These positive aspects consist of:

Profiling scheduling latencies. I am going to focus on an software program of eBPF to amass scheduling latency stats.

Profiling useful resource effectivity. For background, I am going to first introduce the scheduler characteristic core scheduling which is developed for mitigating L1TF cpu vulnerability. Then I am going to introduce the eBPF characteristic ksym which enables this software program and affirm how eBPF can support yarn the forced lazy time, a invent of cpu usage inefficiency caused by core scheduling.

The third software program of eBPF is to encourage userspace scheduling. ghOSt is a framework open sourced by Google to enable overall-goal delegation of scheduling protection to userspace processes in a Linux ambiance. ghOSt uses BPF acceleration for defense actions that deserve to occur closer to scheduling edges. We use this to maximize CPU utilization (pick_next_task), decrease jitter (task_tick elision) and preserve watch over tail latency (select_task_rq on wakeup). We’re also experimenting with BPF to place in pressure a scaled-down variant of the scheduling protection while upgrading the principle userspace ghOSt agent.

|

三、扩展

1>、调度激活机制

1

2

3

4

5

6

7

8

9

10

11

| https://www.codenong.com/cs105549070/ 调度激活机制

upcall具体做了什么?

内核发现用户进程的一个线程被block了(比如调用了一个被block的system call,或者发生了缺页异常。

内核通过进程的运行时线程被block(还会告知被block的线程的详细信息),这个就是upcall

进程的运行时系统接收到内核发来的消息,得知自己的线程被block

运行时系统先将当前线程标识为block(会保存在线程表-thread table)

运行时系统从当前线程表(thread table)选择一个ready的线程进行运行

至此已经完成了当一个进程内的一个用户线程被block(比如发生缺页异常)时,不会导致整个进程被block,这个进程的内的其他用户线程还可以继续运行(类似于内核线程)。

当内核发现之前被block的线程可以run了,同样会通过upcall通知运行时系统,运行时系统要么马上运行该线程,要么把该线程标志位ready放入线程表。

|

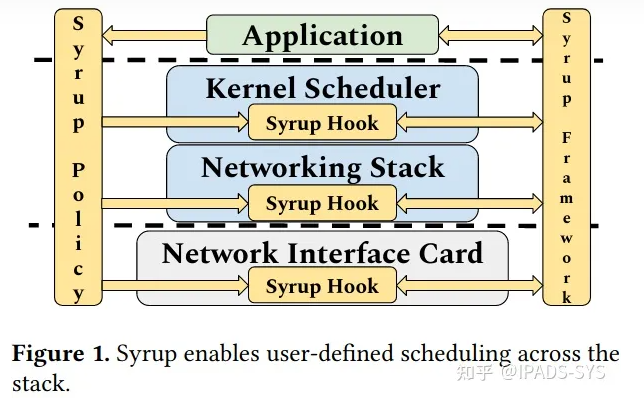

2>、基于网络栈的用户态定制调度

Syrup: User-Defined Scheduling Across the Stack

1

| http://stanford.edu/~kkaffes/papers/syrup.pdf

|

1

| https://zhuanlan.zhihu.com/p/464560315

|

3> fast preemt快速抢占

https://ubuntu.com/blog/industrial-embedded-systems-ii Low latency Linux kernel for industrial embedded systems – Part II

4> 轻量级线程LWT

PS:

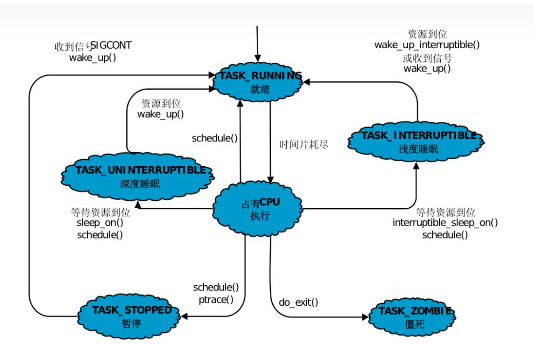

1> linux内核进程状态机转化

1

| https://blog.51cto.com/ciellee/3411615 操作系统进程状态和状态转换详解

|

1

2

| shenango:

Process Context Identifiers (PCIDs) allow page tables to be swapped without flushing the TLB

|

2> 可监控的资源

| 监控类型 |

| end-to-end latency |

| schedule latency |

| Memory Bandwidth |

| LLC miss rate |

| Network Bandwidth |

| Disk Bandwidth |

应用特征不同,资源监控(cpu、内存、磁盘、网络)使用率和策略应该是定制化的。或者是性能特征来作为资源调度的依据

3> 现有混布与ghost方案对比

1

2

3

4

5

6

7

8

9

10

11

12

13

14

| 1、QOS混部方案当前的缺点及问题

QOS混布方案是在内核CFS增加定制,由于CFS本身的复杂度很高,导致新增调度策略实现难度大,扩展性弱。

2、Ghost方案的主要特点,及对比混部的优势

1> 可定制的调度实现框架,可以结合场景特点来设计满足不同延迟、吞吐量要求的调度器。

2> 在用户态设计调度策略,独立于内核的CFS调度器,降低调度器实现难度,扩展性强。

3> ghost可以灵活部署,可以支持动态升级回滚,不需要重新编译内核、重启。

ghost就是提供了调度器的设计、实现、部署的一种新范式,与传统的调度器设计有很大区别。

3、Ghost框架灵活性等特点,是否有解决其他问题的潜力

有解决其他问题的潜力;

1> ghost设计之初是在google的数据中心使用,实现在高吞吐量的前提实现低尾时延;

2> 由于具有灵活的可定制调度策略的特性,混布场景自然也可以使用

3> 异构设备场景下的任务调度分发或许也是个很好的场景。

|